Introduction

The Outbreak

The 2014 Ebola outbreak in West Africa is an ongoing public health crisis, which has killed hundreds of people so far. The cross-border nature of this epidemic, which has emerged in Guinea, Liberia, and Sierra Leone, has complicated mitigation efforts, as has the poor health infrastructure in the region. While there has been much analysis and speculation about the factors at play in the spread of the virus, to our knowledge there are no specific predictions about the expected duration and severity of this particular epidemic. In the (certainly temporary) absence of epidemic forecasts, this document explores a simple spatial SEIR model to make some initial predictions.

The Data

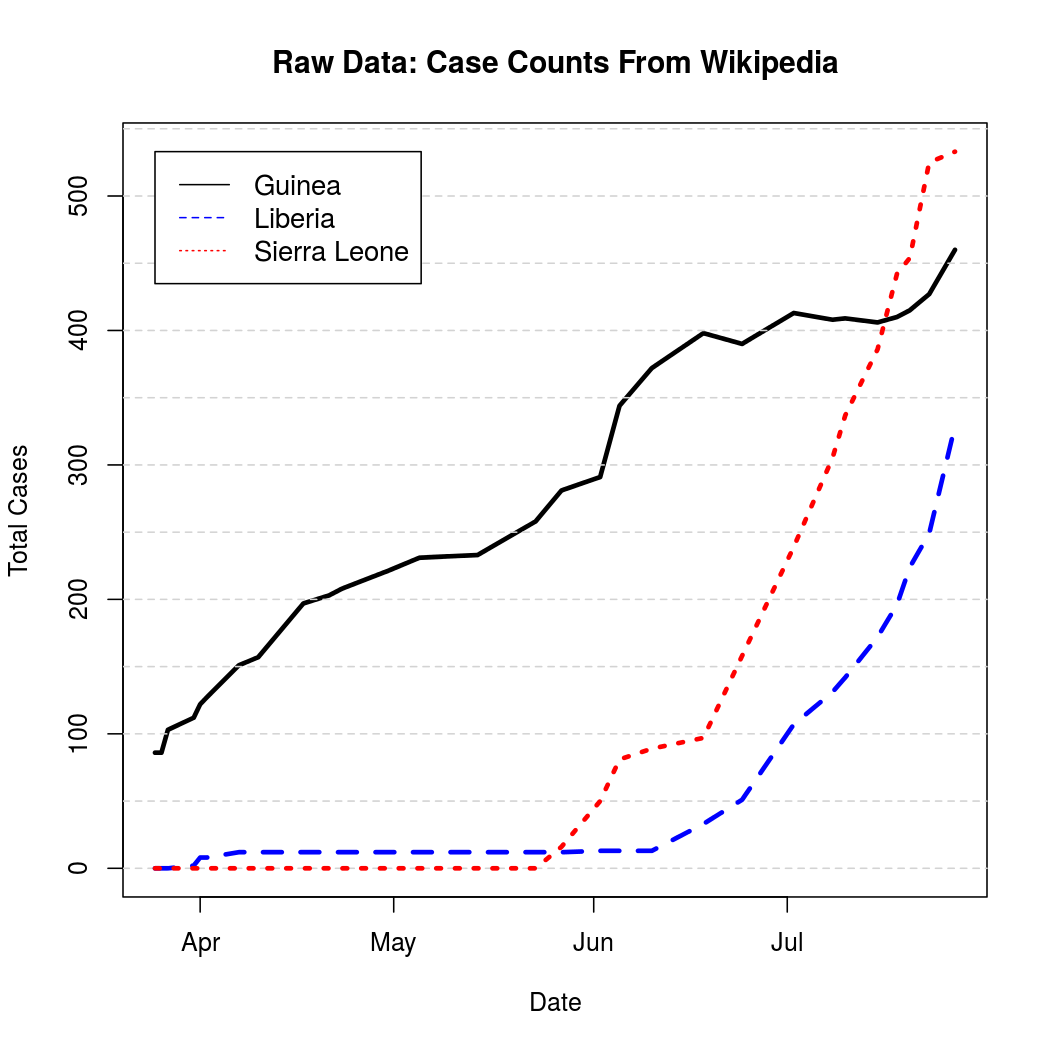

A summary of the WHO case reports is very helpfully compiled on Wikipedia, and it can be easily read into R with the XML library.

With data in hand, let's begin where every analysis should begin: graphs.

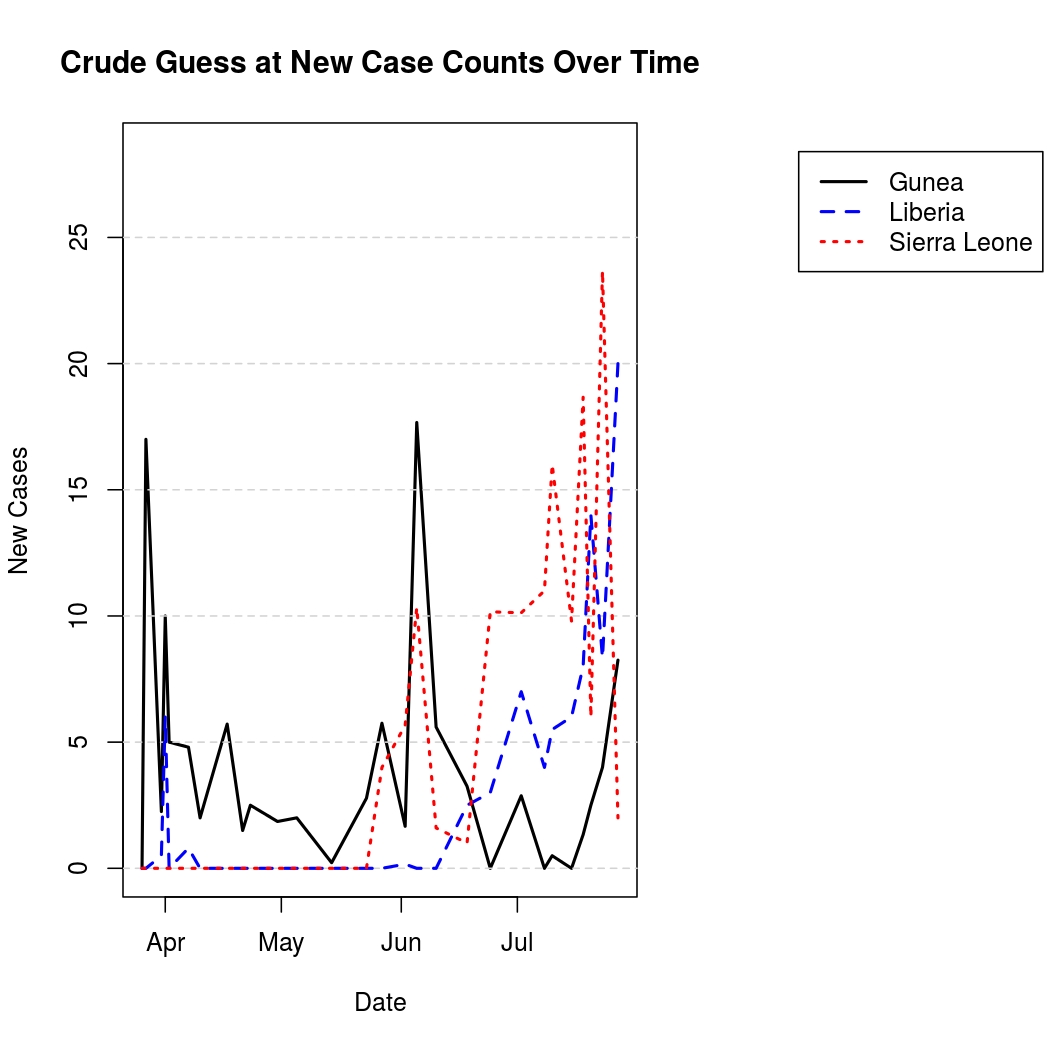

These represent cumulative counts, but because case reports can be revised downward (for example, when suspected cases turn out to be non-Ebola illnesses), the graphs are not monotone. A quick but effective solution to this problem is to simply "un-cumulate"* the data and bound it at zero to get a rough estimate of new case counts over time.
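The un-cumulating step can be sketched in a few lines of base R. This is a minimal illustration, not the code behind the figures. The function name `uncumulate` and the example counts are hypothetical, and the sketch assumes one country's cumulative counts are stored in report-date order.

```r
# Convert a cumulative case series into new-case counts per report.
# Downward revisions produce negative differences, which are bounded at zero.
uncumulate <- function(cumulative) {
  # The first report has no predecessor, so its count is kept as-is.
  new_cases <- c(cumulative[1], diff(cumulative))
  pmax(new_cases, 0)
}

# Hypothetical cumulative counts containing one downward revision (57 -> 52).
cumulative <- c(40, 57, 57, 52, 81)
new_cases <- uncumulate(cumulative)
new_cases  # 40 17 0 0 29
```

Bounding at zero discards the information in a downward revision rather than spreading it over earlier reports. That is why the text calls this a rough estimate.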

*Unlike uncumulate, decumulate is actually a word. Unfortunately it just means "to decrease", and so was unsuitable for use here. There should probably be a word for uncumulating things, perhaps uncumulate.

For better graphical representation, the "un-cumulated" counts are scaled to represent the average number of new infections per day and then linearly interpolated. From this perspective the process looks a bit noisier than it does in the original cumulative counts.
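A minimal base-R sketch of the scaling and interpolation follows. The report days and counts are hypothetical, and the sketch assumes each report's new cases cover the interval since the previous report. Under that assumption, dividing by the interval length gives an average daily rate, and `approx()` does the linear interpolation.

```r
# Hypothetical report days (days since the first report) and new-case counts.
report_day <- c(0, 7, 14, 24, 31)
new_cases  <- c(40, 17, 0, 0, 29)

# Each report after the first covers the days since the previous report.
interval_len <- diff(report_day)
per_day <- new_cases[-1] / interval_len   # average new infections per day

# Linearly interpolate the daily rate onto every day in the reporting window.
daily <- approx(x = report_day[-1], y = per_day,
                xout = seq(report_day[2], report_day[length(report_day)], by = 1))
head(cbind(day = daily$x, rate = daily$y))
```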

One can also represent this data geographically to get an idea of the spatial epidemic pattern, and to place the problem in a more relatable context.

Average Number of Infections Per Day:

Compartmental Models

Now that the data is read in (and now that we have several plots to suggest that we haven't done anything too terribly stupid with it), let's do some compartmental epidemic modeling. Not only has Ebola been well modeled in the past using compartmental modeling techniques, but this author happens to be working on a software library designed to fit compartmental models in the spatial SEIRS family. What a strange coincidence! Specifically, we'll be using hierarchical Bayesian estimation methods to fit a spatial SEIR model to the data.

While a full treatment of this field of epidemic modeling is (far) beyond the scope of this document, the basic idea is pretty intuitive. To build a simplified model of a disease process, we define discrete disease states (a.k.a. compartments). The most common of these are S, E, I, and R, which stand for:

- Susceptible to a particular disease

- Exposed and infected, but not yet infectious

- Infectious and capable of transmitting the disease

- Removed or recovered

This sequence, traversed by members of a population (S to E to I to R), forms what we might call the temporal process model of our analysis. This analysis belongs to the stochastic branch of the compartmental modeling family, which has its roots in deterministic systems of ordinary and partial differential equations. In the stochastic framework, transitions between the compartments occur according to unknown probabilities. We introduce spatial structure into the S to E probability, which captures infection activity. Some details of this are given as comments to the code below, and more information than you probably want on the statistical particulars is available in this pdf document. For now, suffice it to say that we'll place a simple spatial structure on the epidemic process, which allows disease to spread between the three nations involved, and we'll try to estimate the strength of that relationship. Many other structures are possible, limited primarily by the amount of additional research and data compilation one is willing to do.
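To make the stochastic S to E to I to R transitions concrete, here is a sketch of one day of a chain-binomial spatial SEIR process. This is an assumed form chosen for illustration, not libspatialSEIR's exact parameterization, which is described in the pdf mentioned above. In this form, infection pressure in country i is its own infectious fraction plus `rho` times the infectious fractions of the other countries, scaled by `exp(eta_i)`. All inputs in the example call are hypothetical.

```r
# One day of a chain-binomial spatial SEIR process (assumed, illustrative form).
# S, E, I, R: counts per location; eta: log infection intensity per location;
# rho: spatial dependence; p_EI, p_IR: daily E->I and I->R probabilities.
seir_step <- function(S, E, I, R, eta, rho, p_EI, p_IR) {
  N <- S + E + I + R
  prev <- I / N
  # Own infectious fraction plus rho times the other locations' fractions.
  pressure <- prev + rho * (sum(prev) - prev)
  p_SE <- 1 - exp(-exp(eta) * pressure)
  n <- length(S)
  new_E <- rbinom(n, S, p_SE)   # S -> E transitions
  new_I <- rbinom(n, E, p_EI)   # E -> I transitions
  new_R <- rbinom(n, I, p_IR)   # I -> R transitions
  list(S = S - new_E,
       E = E + new_E - new_I,
       I = I + new_I - new_R,
       R = R + new_R)
}

set.seed(1)
N0 <- c(1e6, 1e6, 1e6)   # hypothetical population sizes
I_start <- c(50, 10, 5)  # hypothetical initial infectious counts
next_state <- seir_step(S = N0 - I_start, E = c(0, 0, 0), I = I_start,
                        R = c(0, 0, 0), eta = c(-1, -1.5, -1.2),
                        rho = 0.2, p_EI = 0.12, p_IR = 0.12)
```

Each transition count is a binomial draw from the compartment it leaves. The population of each location is therefore conserved, and no compartment can go negative.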

For the purposes of this analysis, we will not do anything fancy with demographic information or public health intervention dates. Demographic parameters are relatively difficult to estimate here, as there are only three spatial units, all from the same region. Intervention dates are more promising, but their inclusion requires much more background research than we have time for here. In the interest of simplicity and estimability, we'll just fit a different disease intensity parameter for each of the three countries to capture aggregate differences in Ebola susceptibility, and use a set of basis functions to capture the temporal trend.
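One way to picture the intensity model is as a design matrix with one intercept column per country plus shared temporal basis columns. The sketch below builds such a matrix in base R. The long-format layout and the report days are hypothetical, and libspatialSEIR may expect its covariates arranged differently.

```r
# Hypothetical long-format layout: one row per (country, report day).
report_day <- c(0, 7, 14, 24, 31)
grid <- expand.grid(day = report_day,
                    country = c("Guinea", "Liberia", "Sierra Leone"))

# "0 +" drops the global intercept so each country gets its own intercept;
# poly(day, 3) adds a shared cubic orthogonal polynomial time trend.
X <- model.matrix(~ 0 + country + poly(day, 3), data = grid)
dim(X)
colnames(X)
```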

Analysis 1

Set Up

There are some things we need to define before we can start fitting models and making predictions.

- The population sizes need to be determined.

- Initial values for the four compartments must be determined.

- The time points are not evenly spaced, so we need to define appropriate offset values to capture the amount of aggregation performed (time between reports).

- We must define the spatial correlation structure.

- A set of basis functions needs to be chosen to capture the temporal trend.

- Prior parameters and parameter starting values must be specified for each chain.

- A whole bunch of bookkeeping stuff for which I haven't yet programmed sensible default behavior needs to be set up.

Compartment starting values follow the usual convention of letting the entire initial population be divided into susceptibles and infectious individuals. The starting value for the number of infectious individuals was 86, the first infection count available in the data. Offsets are actually calculated in the first code block (above) as the differences between the report times. For temporal basis functions, orthogonal polynomials of degree three were used. Prior parameters for the E to I and I to R transitions were chosen based on well documented values for the average latent and infectious times, and the rest of the prior parameters were left vague. These decisions are addressed in more detail as comments to the code below.
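The bookkeeping described above covers offsets, starting compartments, and priors centered on average latent and infectious times. It might look something like the following base-R sketch. The report dates, population size, mean latent time of 10 days, and prior weight are placeholders, not the values used in the analysis. The Beta prior on a daily transition probability is also an assumption, since libspatialSEIR may parameterize these transitions differently.

```r
# Hypothetical report dates; offsets are the days elapsed between reports.
report_dates <- as.Date(c("2014-03-25", "2014-03-31", "2014-04-07", "2014-04-17"))
offsets <- as.numeric(diff(report_dates))

# Starting compartments: everyone is susceptible except the initial infectious.
population <- 1e6   # hypothetical population size
I0 <- 86            # first infection count, as described in the text
init <- c(S = population - I0, E = 0, I = I0, R = 0)

# Beta prior (assumed form) for a daily transition probability, centered at
# 1 / mean_days, with `weight` acting as a prior pseudo-sample size.
beta_prior_from_mean <- function(mean_days, weight) {
  p <- 1 / mean_days
  c(a = p * weight, b = (1 - p) * weight)
}
latent_prior <- beta_prior_from_mean(mean_days = 10, weight = 100)  # placeholders
```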

With the setup out of the way, we can finally build the models. In order to assess convergence, we'll make three model objects, one for each MCMC run.

With the model objects created, we can perform some short runs in order to choose sensible Metropolis tuning parameters. The following script uses the runSimulation function defined in the previous code block to do just that.
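The idea behind the short tuning runs can be illustrated on a one-dimensional toy target. This is a generic sketch, not runSimulation or libspatialSEIR's own tuning procedure. After each short run, it widens the random-walk proposal if the acceptance rate is above a target and narrows it if below. The 0.44 target is a commonly cited rule of thumb for one-dimensional random-walk proposals, and the standard normal target is a stand-in.

```r
# Random-walk Metropolis for a one-dimensional target given by its log density.
rw_metropolis <- function(log_density, init, n_iter, proposal_sd) {
  x <- init
  lp <- log_density(x)
  accepted <- 0
  draws <- numeric(n_iter)
  for (k in seq_len(n_iter)) {
    proposal <- x + rnorm(1, mean = 0, sd = proposal_sd)
    lp_prop <- log_density(proposal)
    if (log(runif(1)) < lp_prop - lp) {
      x <- proposal
      lp <- lp_prop
      accepted <- accepted + 1
    }
    draws[k] <- x
  }
  list(draws = draws, accept_rate = accepted / n_iter, last = x)
}

# Short tuning runs: widen the proposal when acceptance is above target,
# narrow it when acceptance is below target.
tune_proposal_sd <- function(log_density, init, proposal_sd = 1,
                             n_short = 500, n_rounds = 20, target = 0.44) {
  x <- init
  for (r in seq_len(n_rounds)) {
    run <- rw_metropolis(log_density, x, n_short, proposal_sd)
    x <- run$last
    proposal_sd <- proposal_sd * exp(run$accept_rate - target)
  }
  list(proposal_sd = proposal_sd, state = x)
}

set.seed(123)
log_target <- function(x) dnorm(x, log = TRUE)   # stand-in target density
tuned <- tune_proposal_sd(log_target, init = 0)
check <- rw_metropolis(log_target, tuned$state, 5000, tuned$proposal_sd)
check$accept_rate
```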

Using these tuning parameters, we can now run the chains until convergence. As before, we'll adjust the tuning parameters along the way. Astute readers may notice the frankly inconvenient number of samples requested, and will correctly infer that autocorrelation is currently a major problem for this library. While such autocorrelation does not impact the validity of estimates based on the converged chains, it does increase the required computation time. This is an area of active development for libspatialSEIR.
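To see why autocorrelation inflates the number of samples needed, compare a naive standard error with one that accounts for dependence between draws. The sketch uses an AR(1) series as a stand-in for MCMC output and a batch-means estimator. This is a different method from coda's "Time-series SE", which is based on a spectral density estimate, but it illustrates the same effect. The implied effective sample size is much smaller than the raw number of draws.

```r
# Stand-in for MCMC output: a strongly autocorrelated AR(1) chain.
set.seed(42)
n <- 10000
chain <- as.numeric(arima.sim(model = list(ar = 0.9), n = n))

# Naive SE treats the draws as independent.
naive_se <- sd(chain) / sqrt(n)

# Batch-means SE: split the chain into consecutive batches and use the spread
# of the batch means, which absorbs autocorrelation within each batch.
batch_means_se <- function(x, n_batches = 50) {
  batch_size <- floor(length(x) / n_batches)
  x <- x[seq_len(n_batches * batch_size)]
  batch_means <- colMeans(matrix(x, nrow = batch_size))  # one column per batch
  sd(batch_means) / sqrt(n_batches)
}
ts_se <- batch_means_se(chain)

# Effective sample size implied by the ratio of the two standard errors.
ess <- n * (naive_se / ts_se)^2
c(naive_se = naive_se, ts_se = ts_se, ess = ess)
```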

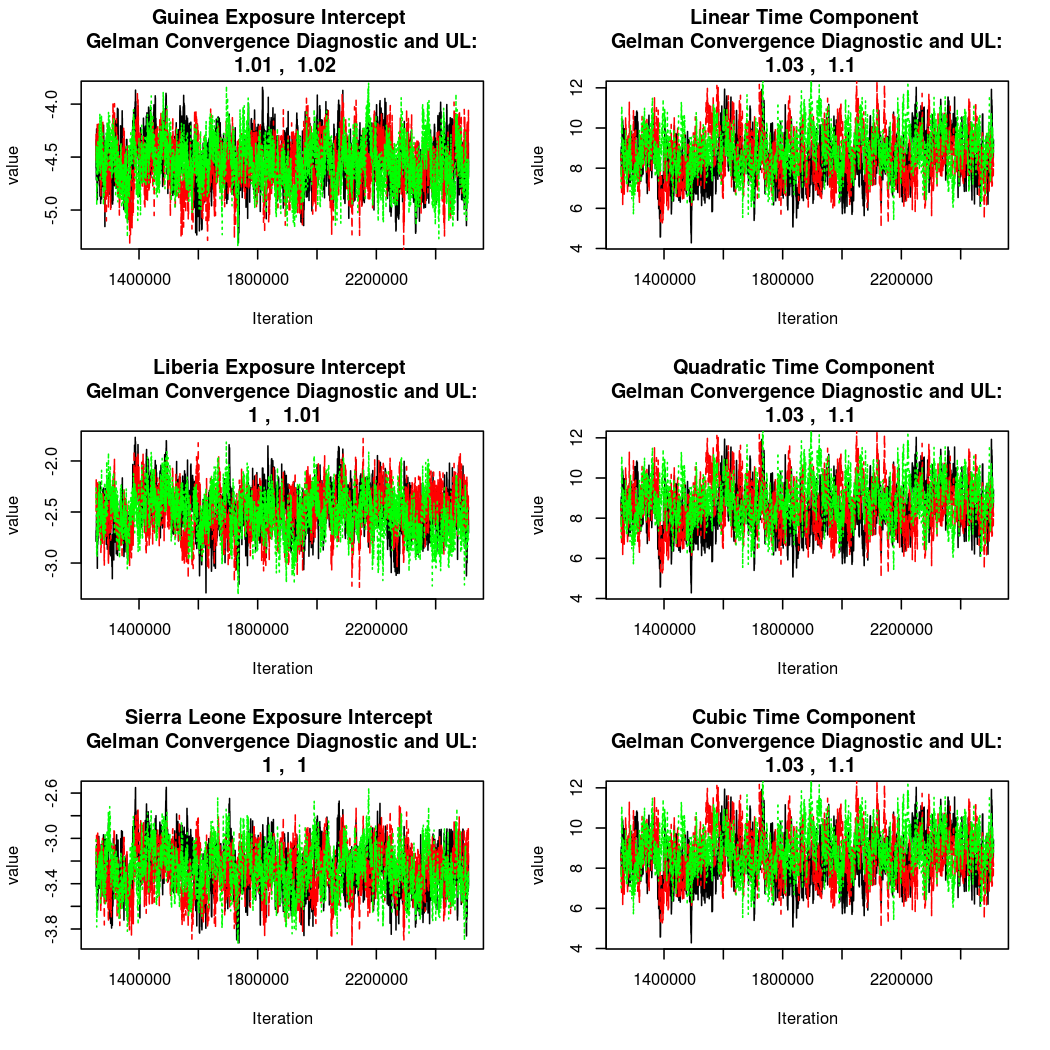

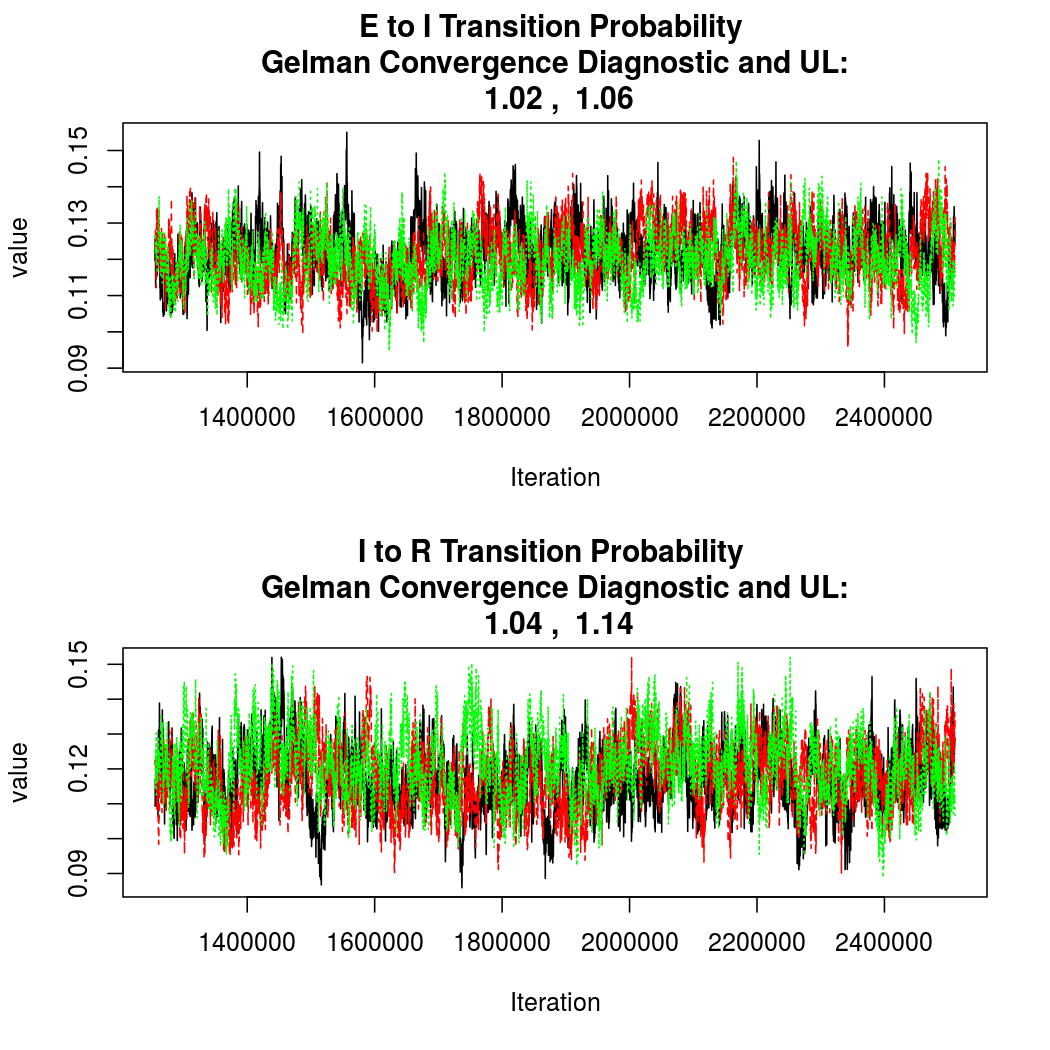

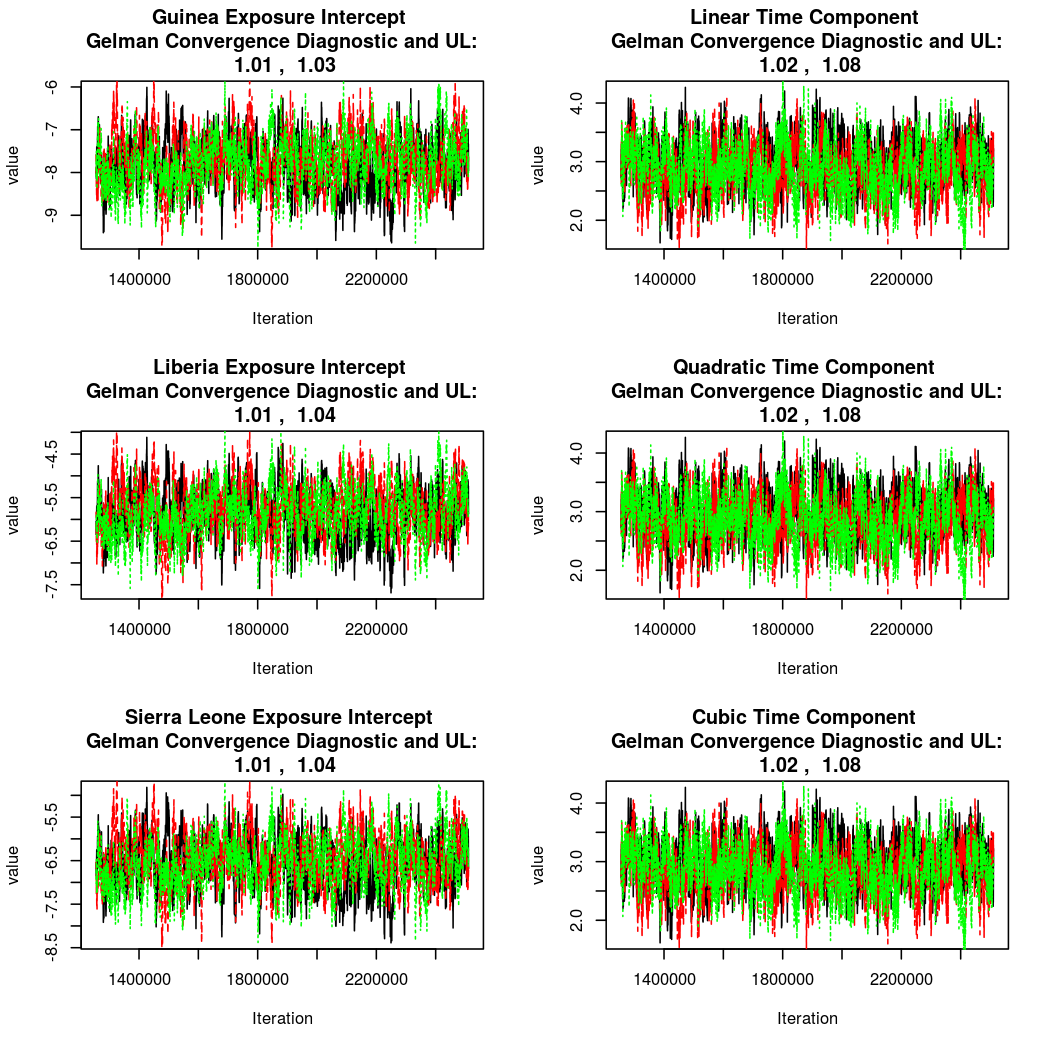

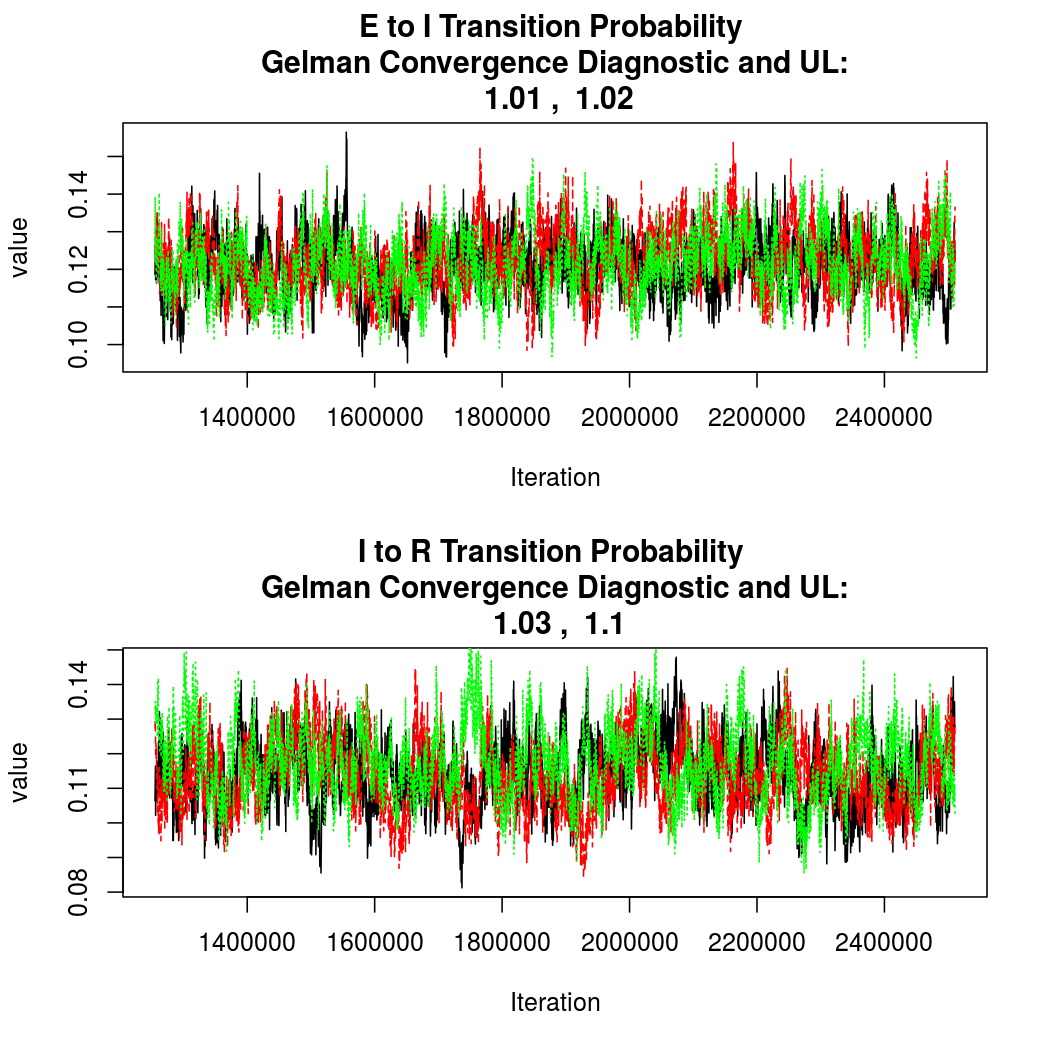

As this is a Bayesian analysis in which the posterior distribution is sampled using MCMC techniques, we really need some indication that the samplers have indeed converged to the posterior distribution in order to make any inferences about the problem at hand. In the code below, we'll read in the MCMC output files created so far, plot the three chains for each of several important parameters, and take a look at the Gelman and Rubin convergence diagnostic (which should be close to 1 if the chains have converged).
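For intuition about what the Gelman and Rubin diagnostic measures, here is a basic potential scale reduction factor for a single parameter. It compares the variance between chains to the variance within chains. This is the simple textbook version without the degrees-of-freedom correction that coda's `gelman.diag` applies, and the chains are simulated stand-ins.

```r
# Basic Gelman-Rubin potential scale reduction factor for one parameter.
# `chains`: matrix with one column per chain and one row per iteration.
psrf <- function(chains) {
  n <- nrow(chains)
  W <- mean(apply(chains, 2, var))      # mean within-chain variance
  B <- n * var(colMeans(chains))        # between-chain variance
  var_plus <- (n - 1) / n * W + B / n   # pooled estimate of posterior variance
  sqrt(var_plus / W)
}

set.seed(2014)
mixed <- matrix(rnorm(3000), ncol = 3)                           # same target
stuck <- cbind(rnorm(1000), rnorm(1000), rnorm(1000, mean = 5))  # one chain elsewhere
c(mixed = psrf(mixed), stuck = psrf(stuck))
```

Chains that have mixed give a value near 1. A chain stuck in a different region inflates the between-chain variance and pushes the value well above 1.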

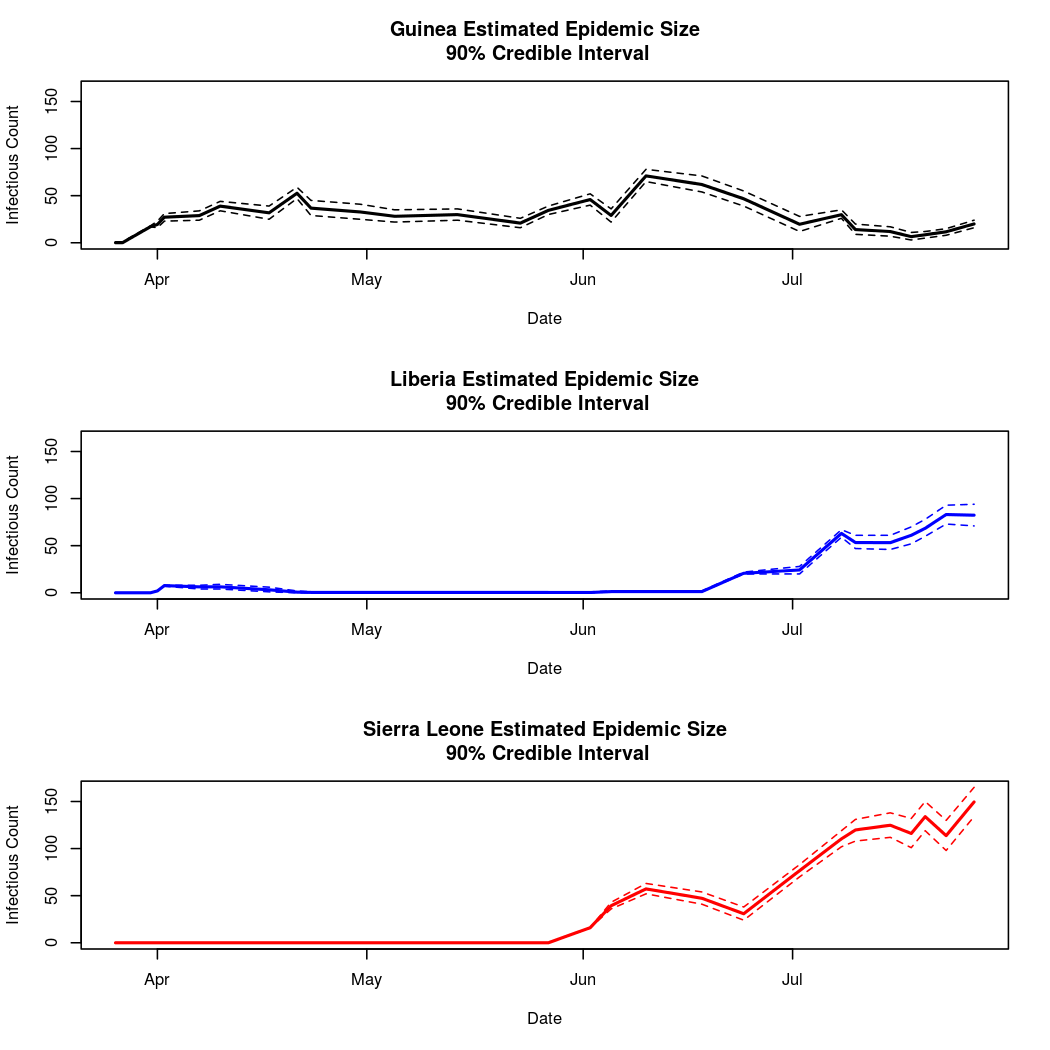

The convergence looks quite reasonable, so let's dissect the estimates a bit.

Estimated Epidemic Behavior

Output from the coda library summary:

Iterations = 1:5023, thinning interval = 1, number of chains = 3, sample size per chain = 5023.

1. Empirical mean and standard deviation for each variable, plus standard error of the mean:

| Parameter | Mean | SD | Naive SE | Time-series SE |
|---|---|---|---|---|
| Guinea Intercept | -4.548 | 0.22017 | 1.79e-03 | 0.010780 |
| Liberia Intercept | -2.498 | 0.21236 | 1.73e-03 | 0.011243 |
| Sierra Leone Intercept | -3.274 | 0.19887 | 1.62e-03 | 0.008802 |
| Linear Time Component | 8.676 | 1.13028 | 9.21e-03 | 0.056190 |
| Quadratic Time Component | -9.288 | 0.75167 | 6.12e-03 | 0.031001 |
| Cubic Time Component | 4.895 | 0.50215 | 4.09e-03 | 0.034313 |
| Spatial Dependence Parameter | 0.228 | 0.02653 | 2.16e-04 | 0.001191 |
| E to I probability | 0.121 | 0.00776 | 6.32e-05 | 0.000377 |
| I to R probability | 0.118 | 0.00958 | 7.81e-05 | 0.000485 |

2. Quantiles for each variable:

| Parameter | 2.5% | 25% | 50% | 75% | 97.5% |
|---|---|---|---|---|---|
| Guinea Intercept | -4.979 | -4.699 | -4.547 | -4.395 | -4.127 |
| Liberia Intercept | -2.922 | -2.641 | -2.494 | -2.350 | -2.096 |
| Sierra Leone Intercept | -3.667 | -3.408 | -3.272 | -3.137 | -2.895 |
| Linear Time Component | 6.533 | 7.907 | 8.652 | 9.422 | 10.933 |
| Quadratic Time Component | -10.766 | -9.809 | -9.274 | -8.771 | -7.848 |
| Cubic Time Component | 3.882 | 4.557 | 4.909 | 5.251 | 5.829 |
| Spatial Dependence Parameter | 0.177 | 0.209 | 0.227 | 0.245 | 0.281 |
| E to I probability | 0.106 | 0.116 | 0.121 | 0.126 | 0.137 |
| I to R probability | 0.100 | 0.112 | 0.118 | 0.125 | 0.138 |

The average time spent in a particular disease compartment is just one divided by the probability of a transition between compartments. The units here are days, so we can see that the average infectious time is estimated to be between 7.3 and 10 days, while the average latent time is most likely between 7.3 and 9.4 days (95% credible intervals). In reality, there is a lot of variability in these times for Ebola, but these seem like reasonable estimates for the average values.
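The conversion from transition probabilities to average times can be checked directly against the quantile table. If an individual leaves a compartment each day with probability p, the time spent there is geometric with mean 1/p days. Because 1/p is a decreasing function, the endpoints of a quantile-based interval swap. The inputs below are the 2.5% and 97.5% quantiles copied from the coda summary. Those values are rounded to three decimals, which likely explains why the lower infectious bound comes out as 7.25 rather than the 7.3 quoted in the text.

```r
# 2.5% and 97.5% posterior quantiles of the daily transition probabilities,
# copied from the coda summary table (rounded to three decimals there).
p_EI <- c(lower = 0.106, upper = 0.137)
p_IR <- c(lower = 0.100, upper = 0.138)

# Mean time in a compartment is 1 / p days (geometric waiting time).
# 1 / p is decreasing, so the upper probability gives the lower time bound.
mean_days <- function(p) c(lower = 1 / p[["upper"]], upper = 1 / p[["lower"]])
latent_days <- mean_days(p_EI)
infectious_days <- mean_days(p_IR)
round(rbind(latent = latent_days, infectious = infectious_days), 2)
```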

We also notice that there is definitely non-zero spatial dependence (the distribution of the spatial dependence parameter is well separated from zero), indicating significant mixing between the populations. This is unsurprising, as the disease has in fact spread between all three nations.

It also appears that Guinea has the lowest estimated epidemic intensity, followed by Sierra Leone and then Liberia. The Sierra Leone and Liberia intercepts are much closer to each other than either is to Guinea's, although their 95% credible intervals overlap only slightly.

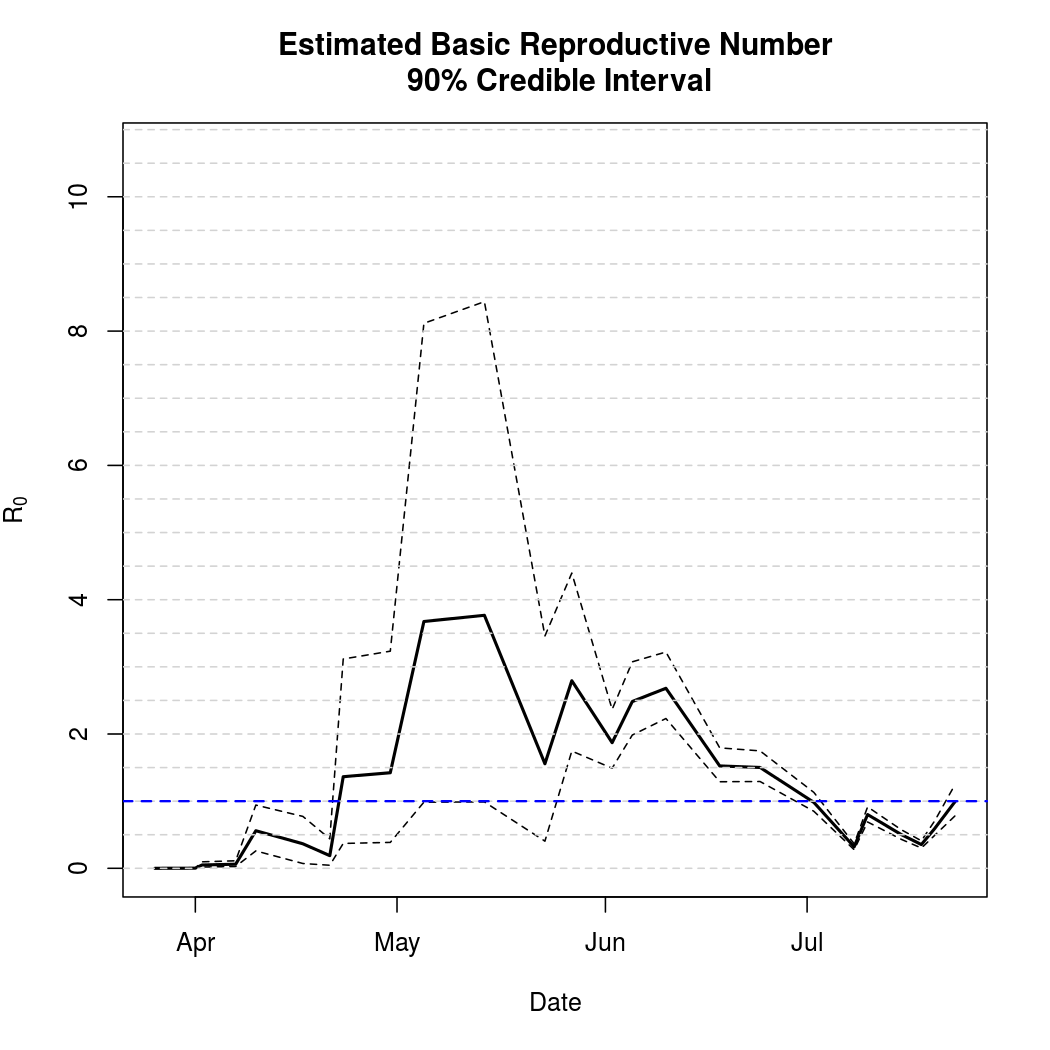

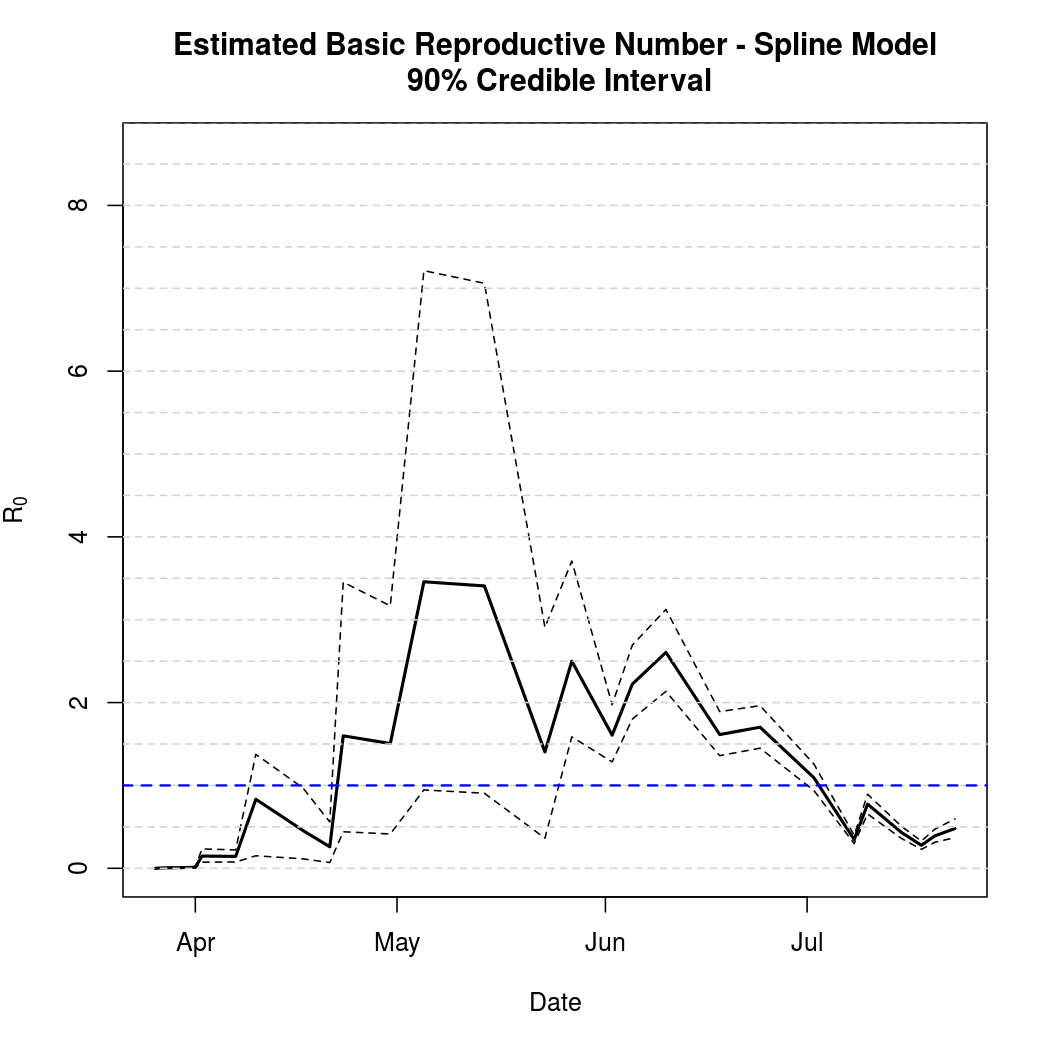

Basic Reproductive Number Calculation

A common tool for describing the evolution of an epidemic is a quantity known as the basic reproductive number, the basic reproductive ratio, or one of several other variants on that theme. The basic idea is to quantify how many secondary infections a single infectious individual is expected to cause in a large, fully susceptible population. Naturally, when this ratio exceeds one we expect the epidemic to spread. Conversely, a basic reproductive number less than one indicates that a pathogen is more likely to die out. This software library doesn't yet compute the ratio automatically, but does provide what's known as the "next generation matrix", which can be used to quickly calculate the quantity.

In addition to coming up with a point estimate of the ratio, it is helpful to quantify the uncertainty in the estimates obtained. The code below draws additional samples from the posterior distribution in order to estimate this uncertainty.
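The basic reproductive number is the spectral radius (the largest eigenvalue modulus) of the next generation matrix. Computing it once per posterior draw gives a posterior sample of R0, which can then be summarized with quantiles. We have not reproduced libspatialSEIR's interface for retrieving the next generation matrix. The sketch assumes each posterior draw yields a numeric square matrix, and the draws generated here are random placeholders.

```r
# R0 as the spectral radius (largest eigenvalue modulus) of a next generation matrix.
spectral_radius <- function(ngm) {
  max(Mod(eigen(ngm, only.values = TRUE)$values))
}

# Hypothetical posterior draws of a 3 x 3 next generation matrix:
# country-specific diagonal terms plus a common cross-country term.
set.seed(7)
make_ngm_draw <- function() {
  ngm <- matrix(runif(1, min = 0.1, max = 0.3), nrow = 3, ncol = 3)
  diag(ngm) <- runif(3, min = 0.8, max = 1.4)
  ngm
}
ngm_draws <- replicate(1000, make_ngm_draw(), simplify = FALSE)

# One R0 value per draw, summarized by posterior quantiles.
r0_draws <- vapply(ngm_draws, spectral_radius, numeric(1))
quantile(r0_draws, probs = c(0.025, 0.5, 0.975))
```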

While the basic reproductive number is a useful quantity to know, it does not directly make any predictions about future epidemic behavior. In order to do that, we need to simulate epidemics based on the MCMC samples we have obtained and summarize their variability over time.
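The general recipe is to simulate one epidemic trajectory per posterior draw and then take pointwise quantiles across trajectories. The sketch below shows that recipe with a deliberately simplified model: a single location, a constant infection intensity `beta`, and no spatial term or temporal basis. The posterior draws are random placeholders, not the fitted values.

```r
# Simulate daily new infectious cases from a single-location chain-binomial SEIR model.
simulate_epidemic <- function(S, E, I, R, beta, p_EI, p_IR, n_days) {
  N <- S + E + I + R
  new_cases <- numeric(n_days)
  for (day in seq_len(n_days)) {
    p_SE <- 1 - exp(-beta * I / N)
    new_E <- rbinom(1, S, p_SE)
    new_I <- rbinom(1, E, p_EI)
    new_R <- rbinom(1, I, p_IR)
    S <- S - new_E
    E <- E + new_E - new_I
    I <- I + new_I - new_R
    R <- R + new_R
    new_cases[day] <- new_I
  }
  new_cases
}

# Hypothetical posterior draws (placeholders, not the fitted values).
set.seed(99)
n_draws <- 200
draws <- data.frame(beta = runif(n_draws, 0.9, 1.3),
                    p_EI = rbeta(n_draws, 12, 88),
                    p_IR = rbeta(n_draws, 12, 88))

# One simulated trajectory per draw: rows are days, columns are draws.
n_days <- 60
sims <- sapply(seq_len(n_draws), function(k) {
  simulate_epidemic(S = 1e6 - 50, E = 0, I = 50, R = 0,
                    beta = draws$beta[k], p_EI = draws$p_EI[k],
                    p_IR = draws$p_IR[k], n_days = n_days)
})

# Pointwise 95% prediction band and median for each day (3 x n_days matrix).
bands <- apply(sims, 1, quantile, probs = c(0.025, 0.5, 0.975))
```

Because each trajectory uses a different posterior draw, the bands reflect both parameter uncertainty and the randomness of the epidemic process itself.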

Epidemic Prediction

Currently the simulation required for epidemic prediction must be done "manually" by writing a bunch of R code. As the library develops, a simpler prediction interface is a high priority.

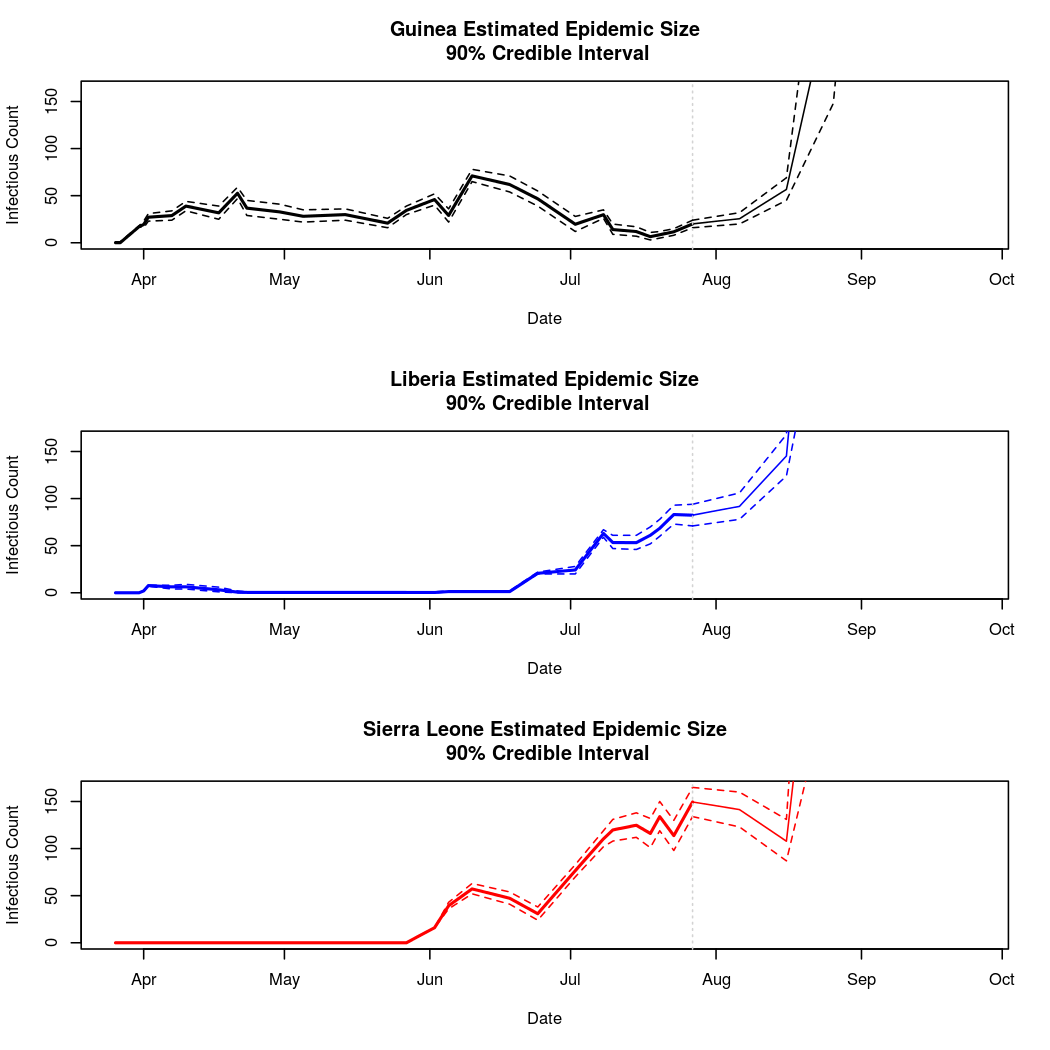

Below, we will attempt to predict the course of the epidemic through early fall. We must be cautious when making predictions about a chaotic process this far into the future. We must be particularly cautious because the basis chosen for the temporal trend in the epidemic intensity process was polynomial. While polynomial bases often provide a good fit to the data, they can behave unreasonably outside the range over which the model was fit (quadratic and cubic terms can get large very quickly).

It looks like our worries about polynomial basis functions were well founded. While these predictions are likely acceptable for several days or weeks after the currently available data, they clearly become dominated by higher-order polynomial terms as time goes on. The behavior of the epidemic so far does not support these large swings, so we can be fairly certain that these long-term predictions are extrapolation errors. Analysis 2 will consider the results of using a natural spline basis for this process instead. Natural spline bases extrapolate linearly beyond their boundary knots, and so are less prone to extreme extrapolation errors.
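The difference in extrapolation behavior is easy to demonstrate. The sketch evaluates a cubic orthogonal polynomial basis (`poly`) and a natural cubic spline basis (`splines::ns`) at equally spaced days beyond a hypothetical fitting range. It then takes second differences, which are zero for anything linear. The 0 to 120 day range and the three future days are illustrative choices.

```r
library(splines)

day <- 0:120                              # hypothetical range of observed days
poly_basis <- poly(day, degree = 3)       # orthogonal cubic polynomial basis
ns_basis <- ns(day, df = 3)               # natural cubic spline basis

# Evaluate both bases at equally spaced days beyond the observed range.
future <- c(150, 180, 210)
poly_future <- predict(poly_basis, future)
ns_future <- predict(ns_basis, future)

# Second differences of equally spaced points are zero for linear functions,
# so they measure how much each basis column bends outside the data.
second_diff <- function(basis) apply(basis, 2, function(col) diff(diff(col)))
second_diff(poly_future)   # non-zero: polynomial terms keep curving
second_diff(ns_future)     # essentially zero: natural splines continue linearly
```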

Analysis 2: Spline Basis

Set Up

The problem with polynomial basis functions is that they extrapolate poorly, exhibiting extreme behavior when used for prediction beyond the observed data. On the other hand, they often perform quite well for estimation and for prediction within the range of the observed data. For this reason, and because spline basis coefficients are somewhat difficult to interpret, Analysis 2 will not repeat the qualitative interpretation work presented above. Parameter estimates are available below for completeness.

Convergence

Again, convergence looks quite good:

Basic Reproductive Number Calculation

The estimated basic reproductive number and associated variability is virtually unchanged:

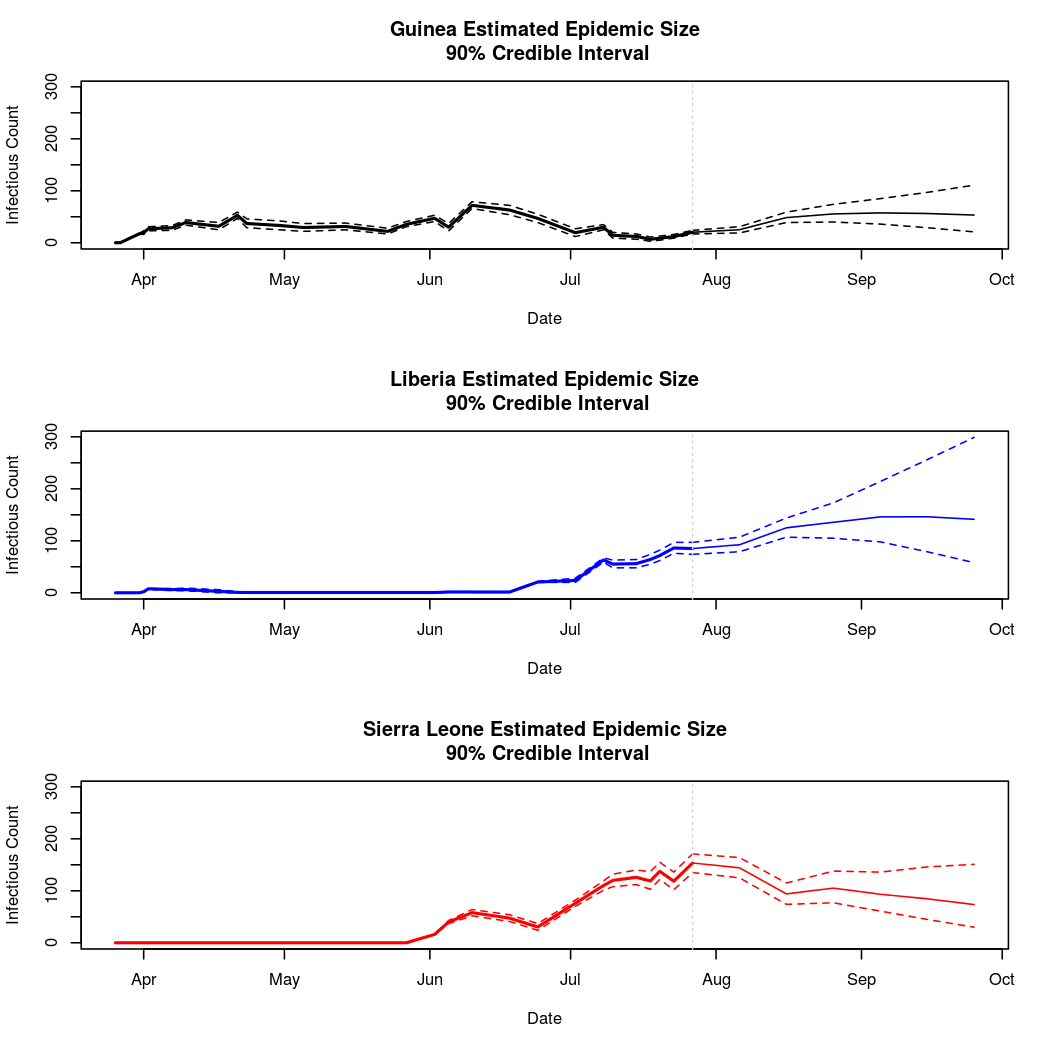

Epidemic Prediction

Here we see the most prominent difference between the two approaches: these predictions appear more reasonable. Such data can also be visualized in map form:

Total Infection Size - Estimated and Predicted:

Conclusions

As the two sets of basis functions give similar answers in the near future, it seems likely that the epidemic will continue at a steady or slightly increasing rate for at least the next few weeks, though we must still be careful about projecting too far into the future. Unfortunately, the most recent data had a fairly pronounced effect on the variability of these estimates. From early July through the data released on the 23rd, the models predicted a temporary continuation of epidemic activity followed by a decrease into the fall. While these predictions are still encompassed by the observed variability, the situation on the ground is clearly evolving quickly. The current evidence is not inconsistent with the possibility that the disease will soon start the slow process of dying out, but our best predictions no longer place a high weight on such a possibility. The next few weeks are likely to be highly dynamic, and will hopefully narrow down the large space of probable epidemic patterns. In the meantime, we may hope for the best for the people of West Africa, and support the efforts of governmental and non-governmental organizations like the WHO and MSF.

That wraps up the analyses for now. This document will continue to be updated as the epidemic progresses, reflecting new data and perhaps additional analysis techniques. As the document is tracked via source control, it will be easy to see how well past predictions held up and how they change in response to new information. Questions and comments can be shared here.